To those of you unaware of the developments in the SAS world, JSON is the new XML and the number of users who need to access JSON has really grown in the recent times. This is mainly due to proliferation of REST-based APIs and other web services. A good reason for JSON being so popular is the fact that it is structured data in the format of text. We have been capable of offering simple parsing techniques, which make use of data step and most recently PROC DS2. Now with the SAS 9.4 and Maintenance 4 we now have a built-in libname engine for the JSON XML.

As a SAS training institute we strive to keep our students updated with the latest developments in the field of data analytics even after they move on.

Let us start with a simple JSON example:

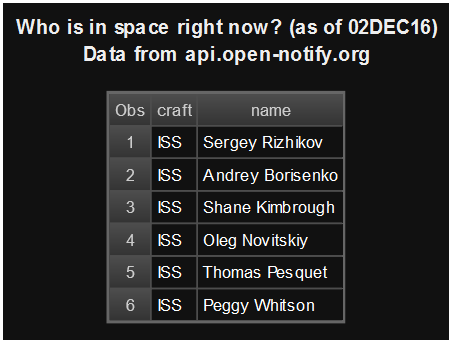

Who occupies the space right now?

When we speak of skyrocketing, it becomes necessary to talk about a new web service we discovered which is cool enough to report who is in the space at the moment (and by that we mean at least one on the international space stations). It is actually a perfect specimen of a REST API, as it does that one thing and is easily integrated into all processes, which also includes SAS. It returns with a simple stream of data, which can be mapped, easily into a tabular structure.

To further understand this, you can view our example code and the results that we ran as a part of our SAS predictive modeling training we obtained with SAS 9.4 and Maintenance 4:

filename resp temp; /* Neat service from Open Notify project */ proc http url="http://api.open-notify.org/astros.json" method= "GET" out=resp; run; /* Assign a JSON library to the HTTP response */ libname space JSON fileref=resp; /* Print result, dropping automatic ordinal metadata */ title "Who is in space right now? (as of &sysdate)"; proc print data=space.people (drop=ordinal:); run;

So, as we can see the reporting is quite simple. But what happens when ones JSON data is not so simple? JSON is capable of representing information in a nested structure, which may be multiple layers deep. Such cases may require some additional mapping to convert the JSON representation into a rectangular data table, which we can utilize for analytics and reporting.

Example of JSON map:

Most recent topics from SAS support communities:

The parsing procedure of DS2 JSON package is a robust one, but it requires a lot of background knowledge about the structure and the fields of the JSON payload. It may also require several lines of code to extract every field, which, we want.

Here is a revised pass that makes use of the JSON engine:

/* split URL for readability */ %let url1=http://communities.sas.com/kntur85557/restapi/vc/categories/id/bi/topics/recent; %let url2=?restapi.response_format=json%str(&)restapi.response_style=-types,-null,view; %let url3=%str(&)page_size=100; %let fullurl=&url1.&url2.&url3; filename topics temp; proc http url= "&fullurl." method="GET" out=topics; run; /* Let the JSON engine do its thing */ libname posts JSON fileref=topics; title "Automap of JSON data"; /* examine resulting tables/structure */ proc datasets lib=posts; quit; proc print data=posts.alldata(obs=20); run;

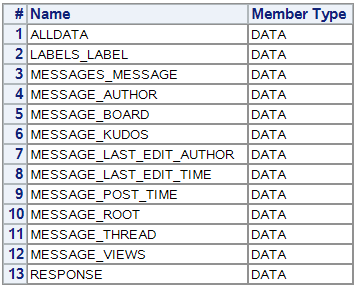

It is due to the many layers of data in the JSON response, that is was possible to generate the tables that SAS automatically creates:

A total of 12 tables which contains several components of the message data which we want, in addition the ALLDATA member which contains all things in just one linear tabular format. The ALLDATA is good for examining the structure, however, not for analysis. You may be able to see that it is basically value-name pairs with almost no data types or formats assigned to them.

Now we could make use of the DATA steps or a PROC SQL to merge the numerous tables into a single denormalized table for our purposes of reporting. However, there is still a better way, which is to apply and define a JSON map for the libname engine for use.

Firstly, to begin with we must rerun the JSON libname assignment with the AUTOMAP option. This will build an external file with the JSON formatted mapping that SAS generates automatically. And in this example the file will land in the work directory with the name as top.map.

filename jmap "%sysfunc(GETOPTION(WORK))/top.map"; proc http url= "&fullurl." method="GET" out=topics; run; libname posts JSON fileref=topics map=jmap automap=create;

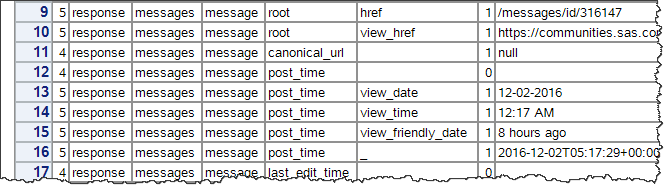

Notice that this map generated is quite long, which is over 400 lines of JSON metadata. And here is a glimpse of the file which describes a few fields in just one of the generated tables.

"DSNAME": "messages_message",

"TABLEPATH": "/root/response/messages/message",

"VARIABLES": [

{

"NAME": "ordinal_messages",

"TYPE": "ORDINAL",

"PATH": "/root/response/messages"

},

{

"NAME": "ordinal_message",

"TYPE": "ORDINAL",

"PATH": "/root/response/messages/message"

},

{

"NAME": "href",

"TYPE": "CHARACTER",

"PATH": "/root/response/messages/message/href",

"CURRENT_LENGTH": 19

},

{

"NAME": "view_href",

"TYPE": "CHARACTER",

"PATH": "/root/response/messages/message/view_href",

"CURRENT_LENGTH": 134

},

With the use of this map as a starting point, we will be able to create a new map file. This will be simpler, quite smaller, and will precisely define just the fields that we want. We will be able to reference each field by its ‘path’ in the JSON nested structure, and we can also specify the types and formats which we want to be in the final data.

In the new map we built, we removed many of the tables and fields to end up with a file that was just about 60 lines long. We also made use of sensible variable names, as well as even specified the SAS formats and informats for transforming some columns while the import process carries forward. For instance, instead of reading the message ‘datetime’ field as a character string, we almost coerced the value to be a numeric variable with format of DATETIME.

This is the code:

SAS BLOGS HOME > THE SAS DUMMY > READING DATA WITH THE SAS JSON LIBNAME ENGINE

Reading data with the SAS JSON libname engine

Chris Hemedinger

Chris Hemedinger|DECEMBER 2, 2016

35706

inShare

111

JSON is the new XML. The number of SAS users who need to access JSON data has skyrocketed, thanks mainly to the proliferation of REST-based APIs and web services. Because JSON is structured data in text format, we’ve been able to offer simple parsing techniques that use DATA step and most recently PROC DS2. But finally*, with SAS 9.4 Maintenance 4, we have a built-in LIBNAME engine for JSON.

Simple JSON example: Who is in space right now?

Speaking of skyrocketing, it is important to mention a cool web service we discovered that reports who is in space right now (at least on the International Space Station). It is actually a perfect specimen of a REST API, because it does just that needful one thing and gets easily integrated into any process, including SAS. It also returns a simple stream of data that can be easily mapped into a tabular structure. Here’s our example code and results, which we achieved with SAS 9.4 Maintenance 4.

filename resp temp; /* Neat service from Open Notify project */ proc http url="http://api.open-notify.org/astros.json" method= "GET" out=resp; run; /* Assign a JSON library to the HTTP response */ libname space JSON fileref=resp; /* Print result, dropping automatic ordinal metadata */ title "Who is in space right now? (as of &sysdate)"; proc print data=space.people (drop=ordinal:); run; JSON who is in space

But what if your JSON data isn’t so simple? JSON can represent information in nested structures that can be many layers deep. These cases require some additional mapping to transform the JSON representation to a rectangular data table that we can use for reporting and analytics.

JSON map example: Most recent topics from SAS Support Communities

The parsing process is robust to call SAS Support Communities API using the DS2 JSON package, but it requires quite a bit of fore knowledge about the structure and fields within the JSON payload. It also requires many lines of code to extract each field that I want.

Here’s a revised pass that uses the JSON engine:

/* split URL for readability */ %let url1=http://communities.sas.com/kntur85557/restapi/vc/categories/id/bi/topics/recent; %let url2=?restapi.response_format=json%str(&)restapi.response_style=-types,-null,view; %let url3=%str(&)page_size=100; %let fullurl=&url1.&url2.&url3; filename topics temp; proc http url= "&fullurl." method="GET" out=topics; run; /* Let the JSON engine do its thing */ libname posts JSON fileref=topics; title "Automap of JSON data"; /* examine resulting tables/structure */ proc datasets lib=posts; quit; proc print data=posts.alldata(obs=20); run;

Thanks to the many layers of data in the JSON response, here are the tables that SAS creates automatically.

json Auto tables

12 tables contain various components of the message data that one may want, as well as the ALLDATA member that contains everything in one linear table. ALLDATA is good for examining structure, but not for conducting analysis. You can see that it’s mostly all name-value pairs with no data types/formats assigned.

json ALLDATA

We could make use of DATA steps or PROC SQL to merge the various tables into a single de-normalized table for my reporting purposes, but there is a better way: define and apply a JSON map for the libname engine to use.

To get started, we need to rerun our JSON libname assignment with the AUTOMAP option. This creates an external file with the JSON-formatted mapping that SAS generates automatically. In this example here, the file lands in the WORK directory with the name “top.map”.

filename jmap "%sysfunc(GETOPTION(WORK))/top.map"; proc http url= "&fullurl." method="GET" out=topics; run;

libname posts JSON fileref=topics map=jmap automap=create;

This generated map is quite long — over 400 lines of JSON metadata. Here is a glimpse of the file that describes a few fields in just one of the generated tables.

"DSNAME": "messages_message",

"TABLEPATH": "/root/response/messages/message",

"VARIABLES": [

{

"NAME": "ordinal_messages",

"TYPE": "ORDINAL",

"PATH": "/root/response/messages"

},

{

"NAME": "ordinal_message",

"TYPE": "ORDINAL",

"PATH": "/root/response/messages/message"

},

{

"NAME": "href",

"TYPE": "CHARACTER",

"PATH": "/root/response/messages/message/href",

"CURRENT_LENGTH": 19

},

{

"NAME": "view_href",

"TYPE": "CHARACTER",

"PATH": "/root/response/messages/message/view_href",

"CURRENT_LENGTH": 134

},

By using this map as a starting point, we can create a new map file — one that is simpler, much smaller, and defines just the fields that one wants. We can reference each field by its “path” in the JSON nested structure, and can specify the types and formats that we would want in the final data.

In our new map, we removed many of the tables and fields and ended up with a file that was just about 60 lines long. We also applied sensible variable names, and even specified SAS formats and informats to transform some columns during the import process. For example, instead of reading the message “DATETIME” field as a character string, we somewhat coerced the value into a numeric variable with a DATETIME format:

{

"NAME": "datetime",

"TYPE": "NUMERIC",

"INFORMAT": [ "IS8601DT", 19, 0 ],

"FORMAT": ["DATETIME", 20],

"PATH": "/root/response/messages/message/post_time/_",

"CURRENT_LENGTH": 8

},

The new map file we created is named as ‘minmap.map’ and is then re-issued as the libname without any AUTOMAP option.

filename minmap 'c:\temp\minmap.map'; proc http url= "&fullurl." method="GET" out=topics; run; libname posts json fileref=topics map=minmap; proc datasets lib=posts; quit; data messages; set posts.messages; run;

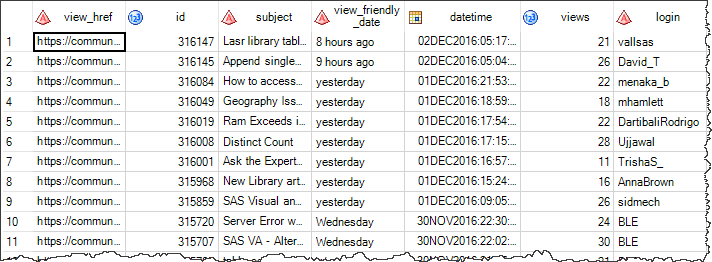

The snapshot of a single dataset, which we obtained as a result, is mentioned here:

We believe that you would also concur with us on the fact that this result is much more feasible that what our first pass generated. In addition, the amount of code is much less, so it is easier to maintain than the previous SAS-based process for reading JSON.

To learn more about SAS predictive modelling take up SAS certification course from DexLab Analytics, now we are in Pune as well.

This post originally appeared on – blogs.sas.com/content/sasdummy/2016/12/02/json-libname-engine-sas

Interested in a career in Data Analyst?

To learn more about Machine Learning Using Python and Spark – click here.

To learn more about Data Analyst with Advanced excel course – click here.

To learn more about Data Analyst with SAS Course – click here.

To learn more about Data Analyst with R Course – click here.

To learn more about Big Data Course – click here.

online certification, online courses, SAS, Sas course, SAS Predictive Modelling

Comments are closed here.